Spark streaming: Fixing all executors not getting jobs

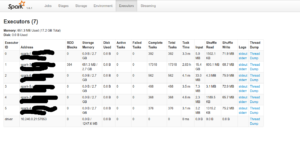

28 Oct 2016

I was recently working on a feature that needed a streaming job running 24×7 and processing 100 million rows per day. The Spark web UI is a wonderful tool for seeing how things run internally. While debugging, I noticed that the streaming jobs were being allocated to only one machine. Spark dispatches tasks to executors in order of data locality (same process, same host, same rack, and so on), and it waits a fixed interval for a free slot at the preferred level before falling back to a less local one. If tasks finish within that interval, a preferred slot always frees up in time, so all the work keeps going to the same executor.
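To make the effect concrete, here is a toy simulation of that waiting behaviour. It is only a sketch and not Spark's actual scheduler: it assumes every task prefers the same executor (as happens when all the data sits on one host), that each executor has a single slot, and that tasks are launched one at a time. The function name `simulate`, the executor names, and the timings are all made up for illustration; in Spark the wait itself is controlled by spark.locality.wait.

```python
def simulate(num_tasks, task_ms, locality_wait_ms,
             executors=("exec-0", "exec-1", "exec-2")):
    """Count tasks per executor in a toy model of locality-based waiting."""
    preferred = executors[0]               # assumption: all tasks prefer this executor
    free_at = {e: 0 for e in executors}    # time at which each executor's single slot frees up
    counts = {e: 0 for e in executors}
    now = 0
    for _ in range(num_tasks):
        # How long would this task have to wait for its preferred executor?
        wait_for_preferred = max(0, free_at[preferred] - now)
        if wait_for_preferred <= locality_wait_ms:
            # The preferred slot frees up within the wait, so the task stays local.
            chosen = preferred
            start = now + wait_for_preferred
        else:
            # The wait expires first: fall back to whichever executor frees up soonest.
            chosen = min(executors, key=lambda e: free_at[e])
            start = max(now + locality_wait_ms, free_at[chosen])
        free_at[chosen] = start + task_ms
        counts[chosen] += 1
        now = start                        # the next task is considered after this launch
    return counts


# Twelve 50 ms tasks, first with a long wait, then with a short one.
print(simulate(12, task_ms=50, locality_wait_ms=3000))
print(simulate(12, task_ms=50, locality_wait_ms=10))
```

In this model, the run with the 3000 ms wait puts every task on exec-0, because each 50 ms task finishes long before the wait expires. The run with the 10 ms wait spreads the tasks across all three executors.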

    spark.scheduler.mode FAIR
    spark.locality.wait 100ms

Adding the settings above to spark-defaults.conf forces Spark to dispatch work to other nodes sooner. The default for spark.locality.wait is 3s, so you may need to tune the value until it suits your workload.
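If you would rather not change spark-defaults.conf for every application on the cluster, the same two settings can be passed per job with spark-submit's `--conf key=value` option. The sketch below only builds and prints such a command line. The helper name `spark_submit_command` and the application file `streaming_job.py` are hypothetical, chosen for illustration.

```python
import shlex

# The two settings from this post; tune the wait value for your own workload.
SETTINGS = {
    "spark.scheduler.mode": "FAIR",
    "spark.locality.wait": "100ms",
}


def spark_submit_command(app, settings):
    """Build a spark-submit argument list with one --conf key=value pair per setting."""
    cmd = ["spark-submit"]
    for key, value in settings.items():
        cmd += ["--conf", f"{key}={value}"]
    cmd.append(app)
    return cmd


# "streaming_job.py" is a placeholder for your own application.
command = spark_submit_command("streaming_job.py", SETTINGS)
print(shlex.join(command))
```

Keeping the settings in one dictionary makes it easy to try different spark.locality.wait values from a script while watching the executor distribution in the web UI.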